The world around us contains huge amounts of visual information, and understanding what we see requires processing and combining knowledge of large objects or scenes (global knowledge) with the fine details that define them (local knowledge, Figures 1A and 2). To support the processing that generates our perception, the brain contains many specialized areas, and information passes from one area to the next in a hierarchy of processing (Figure 1B). But there is a long-standing paradox in vision: as both the size of neural receptive fields and the complexity of object or scene representations increase along this hierarchy, the known visual resolution (called visual acuity) dramatically decreases. At the top of this hierarchy, where perception is generally assumed to arise, the paradox implies that we should recognize objects or scenes but be unable to resolve the fine details that make them up. Making fine visual discriminations depends on high-acuity analysis of spatial frequency (SF) along the visual hierarchy.

Many models of visual processing, including cutting-edge deep learning models, follow the idea that low-level resolution and position information is discarded to yield high-level representations. This is of course not the case for our perception: we can recognize the face of our child in a scene while at the same time resolving the individual hairs of her eyelashes! Both human and non-human primates can discriminate objects exceedingly quickly, effortlessly combining fast, coarse (global) information with slower, fine (local) information into a coherent perception of the world around us (coarse-to-fine perception). How we do this remains a mystery, and current explanations rely on complex, hypothetical recurrent loops that connect global and local information across the hierarchy.

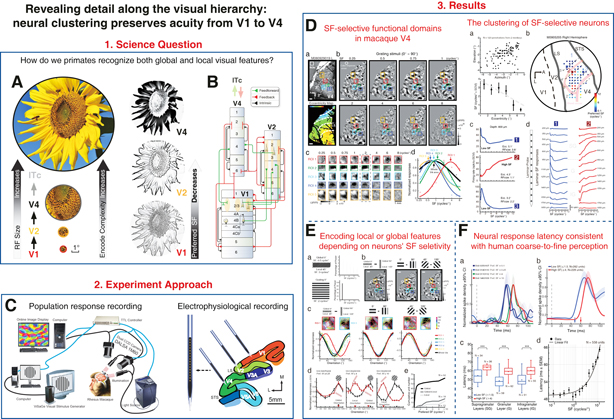

Figure 1: A central question in vision science (A and B). The methods used (C) and the corresponding experimental data and results (D–F).

In a recent study published in Neuron, Dr. WANG Wei’s lab at the Institute of Neuroscience (ION) of the Chinese Academy of Sciences (CAS) examined how local high-resolution information may in fact be preserved from early to intermediate areas of the visual hierarchy in the macaque monkey, the animal model whose vision is closest to our own (Figure 1C). The researchers analyzed the simultaneous transformation of spatial acuity across macaque visual cortical areas V1, V2, and V4. Acuity is often measured through spatial frequency discrimination; spatial frequency, expressed in cycles per degree of visual angle (cycles/°), describes how finely a pattern varies across space, so higher spatial frequencies correspond to finer detail. The researchers focused in particular on area V4, which links local feature representations in V1 and V2 with the global object representation in the infero-temporal cortex (ITc, Figure 1B).

Surprisingly, they discovered clustered “islands” of V4 neurons selective for high spatial frequencies, up to 12 cycles/° (Figure 1D), far exceeding the average optimal spatial frequency of V1 and V2 neurons representing similar positions in the visual field. These neural clusters violate the inverse relationship between visual acuity and retinal eccentricity (acuity falls with distance from the center of gaze) that dominates earlier cortical areas.

They went on to show that higher-acuity clusters represent local features, whereas lower-acuity clusters represent global features of the same stimuli (Figure 1E). Furthermore, neurons in high-acuity clusters responded 10 ms later than those in low-acuity clusters, providing direct neural evidence for the coarse-to-fine nature of perception at intermediate levels of the visual processing hierarchy (Figure 1F). These results demonstrate that high-acuity information is preserved into later stages of the visual hierarchy, where more complex visual cognitive behavior occurs, and may begin to resolve the long-standing paradox concerning fine visual discrimination.

Figure 2 is an abstract artistic rendering of how the primate brain preserves visual acuity and processes local and global features along the object-processing hierarchy. The study reinforces the view that neurons in V4 (and most likely also in infero-temporal cortex) need not have only low visual acuity; if they did, the visual system would have to rely on complex recurrent loops to resolve the paradox described above. The research should prompt further studies of how preserved low-level information supports higher-level vision, and may offer new ideas for the next generation of deep neural network architectures developed by artificial intelligence researchers.

Figure 2: An abstract artistic creation depicting the focus of the current work.

The study, entitled “Revealing detail along the visual hierarchy: neural clustering preserves acuity from V1 into V4”, was published online as a research article in Neuron on March 30, 2018. Drs. LU Yiliang, YIN Jiapeng, and CHEN Zheyuan are co-first authors of the paper. The work was supported by CAS.